Ben Gravy

My favourite YouTuber is Ben Gravy, the semi-pro surfer whose specialty is surfing novelty waves all around the world. His claim to fame is having surfed in every state in the US, even the landlocked ones. Well worth a watch if you like surfing, or just generally positive, affirming content.

The idea

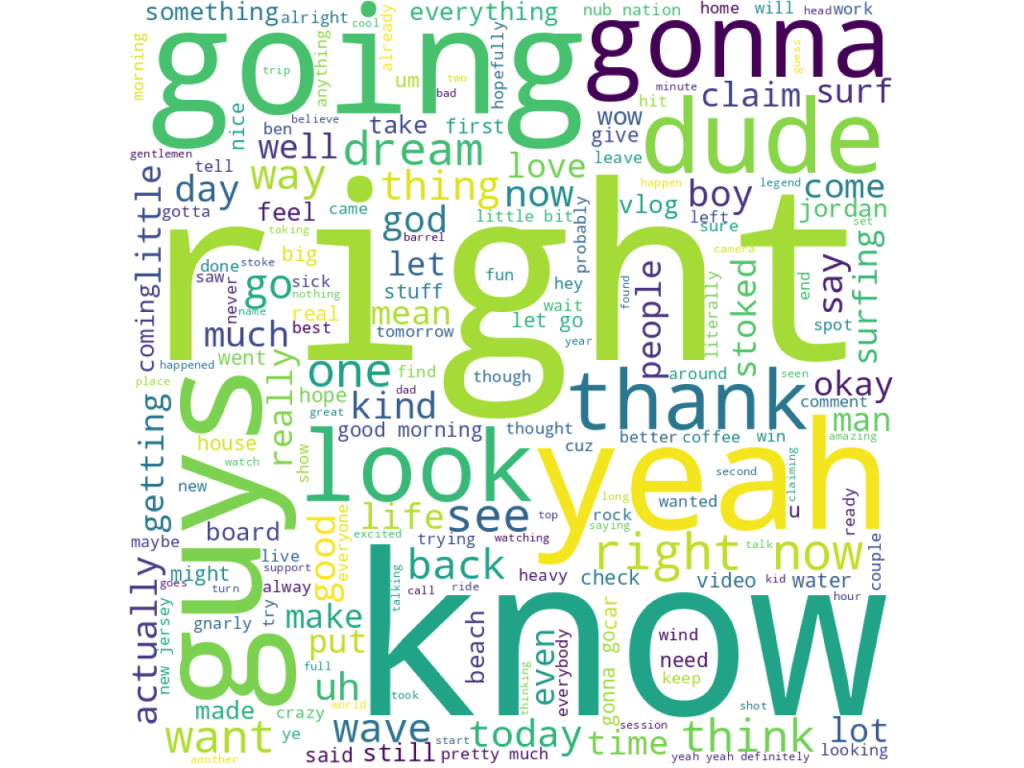

I wanted to make a poster with a word cloud to share with Ben: a kind of overview, in text form, of the content he produces. For this to be meaningful, I thought I should use the transcripts from every single video on his channel. A word cloud, by the way, is an image built from the most common words in a text, with each word sized according to how often it is used; I generated mine with the Python `wordcloud` library.
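To make the "sized according to frequency" idea concrete, here is a toy sketch in plain Python. It assumes simple whitespace tokenisation and a linear mapping from word count to font size; the function `word_sizes` and its size range are hypothetical, and this is not how the `wordcloud` library computes sizes (the library also filters stopwords and lays the words out, which this sketch does not attempt).

```python
from collections import Counter


def word_sizes(text, min_size=10, max_size=100, top_n=5):
    """Return (word, font_size) pairs for the top_n most frequent words.

    Hypothetical helper: sizes scale linearly with frequency, so the most
    common word gets max_size and rarer words shrink towards min_size.
    """
    counts = Counter(text.lower().split())
    most_common = counts.most_common(top_n)
    if not most_common:
        return []
    top_count = most_common[0][1]
    return [
        (word, max(min_size, round(max_size * count / top_count)))
        for word, count in most_common
    ]


print(word_sizes("wave wave wave board board surf"))
# [('wave', 100), ('board', 67), ('surf', 33)]
```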

Steps:

1. Download the list of videos from the channel

For this step, a lot of tutorials online recommend the YouTube Data API. I had a look at this option, but it seemed an unnecessary complication for a once-off task. Instead I went with my favourite YouTube downloader, youtube-dl, which happens to have an option (`--get-id`) that simply lists the video IDs without downloading anything.

```shell
youtube-dl --get-id "https://www.youtube.com/c/BenGravyy" > gravyy_vids.txt
```

This took a long time, since Ben Gravy has more than a thousand videos on his channel from his many years of vlogging.
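The transcript step reads this file one line at a time, so it may be worth sanitising it first. Below is a minimal sketch that drops blank lines, duplicates and anything that does not look like a video ID. It assumes YouTube video IDs are 11 characters of letters, digits, `-` and `_` (true of current IDs such as `9uPIXpb9QWk`, but an assumption rather than a documented guarantee); the helper name `clean_ids` is hypothetical.

```python
import re

# Assumption: a video ID is exactly 11 characters from [A-Za-z0-9_-].
VIDEO_ID = re.compile(r"[A-Za-z0-9_-]{11}")


def clean_ids(lines):
    """Strip whitespace, drop blank/invalid lines and duplicates, keep order."""
    seen = set()
    ids = []
    for line in lines:
        candidate = line.strip()
        if VIDEO_ID.fullmatch(candidate) and candidate not in seen:
            seen.add(candidate)
            ids.append(candidate)
    return ids
```

Usage: `with open('gravyy_vids.txt') as f: _ids = clean_ids(f)`, then loop over `_ids` as before.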

2. Get the transcripts

For some time now, YouTube has automatically transcribed videos uploaded to its platform. The uploader can edit this transcription; I am not sure whether Ben has done so, but from a cursory look the transcripts seemed pretty accurate. Some phrases were labelled “foreign”, which I assume is YouTube saying that it didn’t understand them, so I had to ignore those.

Here is how to get a transcript for one video, using Python:

Credit: https://pythonalgos.com/download-a-youtube-transcript-in-3-lines-of-python

```python
from youtube_transcript_api import YouTubeTranscriptApi
import json

_id = "9uPIXpb9QWk"
# returns a list of caption segments, each a dict with 'text', 'start' and 'duration' keys
transcript = YouTubeTranscriptApi.get_transcript(_id)

with open('test.json', 'w', encoding='utf-8') as json_file:
    json.dump(transcript, json_file)
```

Of course, I had to get multiple transcripts, so I used my previously downloaded gravyy_vids.txt file:

```python
from youtube_transcript_api import YouTubeTranscriptApi
import json

# generate _ids from lines in file, skipping blank lines
with open('gravyy_vids.txt') as f:
    _ids = [line.strip() for line in f if line.strip()]

for index, _id in enumerate(_ids):
    try:
        transcript = YouTubeTranscriptApi.get_transcript(_id)
    except Exception as err:
        # some videos have no transcript (e.g. captions disabled)
        print(f"video {index} ({_id}) has no transcript: {err}")
        continue
    # one file per video: test0.json, test1.json, ...
    with open(f'test{index}.json', 'w', encoding='utf-8') as json_file:
        json.dump(transcript, json_file)
```

This code generates a lot of .json files, each containing the transcript for one video. Now I have practically every word Ben Gravy has ever said in his videos on file!
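With more than a thousand videos, a download loop like the one above can take a long time, and if it stops halfway it has to start again from the beginning. One possible refinement, sketched here under my own assumptions rather than as the method used above, is to name each file after its video ID and skip files that already exist, so a re-run resumes where it left off. The function `download_transcripts` and the `transcripts` folder are hypothetical; the fetcher is passed in as a parameter so the logic can be tried without network access.

```python
import json
import os


def download_transcripts(ids, fetch, out_dir="transcripts"):
    """Save one <video_id>.json file per video, skipping files that exist.

    `fetch` is any function that takes a video ID and returns a
    JSON-serialisable transcript. Returns the IDs that failed.
    """
    os.makedirs(out_dir, exist_ok=True)
    failed = []
    for _id in ids:
        path = os.path.join(out_dir, f"{_id}.json")
        if os.path.exists(path):
            continue  # already downloaded on a previous run
        try:
            transcript = fetch(_id)
        except Exception as err:  # e.g. no captions for this video
            print(f"{_id}: no transcript ({err})")
            failed.append(_id)
            continue
        with open(path, "w", encoding="utf-8") as json_file:
            json.dump(transcript, json_file)
    return failed
```

Usage: `failed = download_transcripts(_ids, YouTubeTranscriptApi.get_transcript)`. If you save into a folder like this, point the glob in the word cloud step at `transcripts/*.json` instead of `*.json`.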

3. Generate the word cloud

The next step involves a bit of data wrangling to get all of the text from the .json files into the format the word cloud library expects: one long string of words. I also skip any caption line that is exactly “foreign”, as well as any line containing “[”, which catches markers like “[music]” that the transcript shows when music is playing.

The script then displays the image with matplotlib; the plot window has a button for saving it.
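The `'[' in val` check in the script below discards the entire caption line whenever a marker appears, including any real words on that line. A gentler alternative, sketched here as an assumption and not the approach used in the script, strips only the bracketed part with a regular expression. The name `clean_caption` is hypothetical.

```python
import re

# Matches bracketed markers such as "[music]" or "[Applause]".
MARKER = re.compile(r"\[[^\]]*\]")


def clean_caption(text):
    """Return lowercase caption text with bracketed markers removed.

    Lines that are exactly "foreign" (apparently YouTube's placeholder for
    speech it could not transcribe) become an empty string.
    """
    if text.strip().lower() == "foreign":
        return ""
    # replace markers with spaces, then collapse repeated whitespace
    return " ".join(MARKER.sub(" ", text).split()).lower()


print(clean_caption("Big WAVE [music] today"))  # big wave today
```

In the loop below, `comment_words += clean_caption(str(val)) + " "` would then replace the `if`/`else` branch.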

```python
# Python program to generate a WordCloud from multiple YouTube transcripts
from wordcloud import WordCloud, STOPWORDS
import matplotlib.pyplot as plt
import pandas as pd
import glob

read_files = glob.glob("*.json")
comment_words = ''
stopwords = set(STOPWORDS)

for f in read_files:
    # read with the same encoding the transcripts were written with
    df = pd.read_json(f, encoding="utf-8")
    if "text" not in df:
        continue  # empty transcript file, nothing to add
    for val in df.text:
        # typecast each val to string
        val = str(val)
        # skip "[music]"-style markers and phrases labelled "foreign"
        if '[' in val or val == "foreign":
            continue
        # split the value into tokens and convert each to lowercase
        tokens = val.lower().split()
        comment_words += " ".join(tokens) + " "

wordcloud = WordCloud(width=800, height=800,
                      background_color='white',
                      stopwords=stopwords,
                      min_font_size=10).generate(comment_words)

# plot the WordCloud image #todo: surfboard contour! https://towardsdatascience.com/how-to-create-beautiful-word-clouds-in-python-cfcf85141214
plt.figure(figsize=(8, 8), facecolor=None)
plt.imshow(wordcloud)
plt.axis("off")
plt.tight_layout(pad=0)
plt.show()
```

I made a note to add a contour, i.e. a shape for the words to fit inside (the wordcloud library calls this a mask), which will most likely be a surfboard. You can learn more about masking here: https://towardsdatascience.com/how-to-create-beautiful-word-clouds-in-python-cfcf85141214
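As a starting point for that surfboard shape, here is a rough sketch that builds a mask without needing an image file. It assumes an ellipse is an acceptable stand-in for a board outline (a real board shape would more likely come from a picture); the function `ellipse_mask` and its `margin` parameter are hypothetical. Pixels set to 0 are where words may go and pixels set to 255 are blocked.

```python
def ellipse_mask(width, height, margin=10):
    """Build a 2-D mask: 0 inside an ellipse (draw here), 255 outside.

    Hypothetical helper: the ellipse fills the canvas minus `margin`
    pixels on each side, as a crude surfboard-like outline.
    """
    cx, cy = (width - 1) / 2, (height - 1) / 2
    rx, ry = width / 2 - margin, height / 2 - margin
    return [
        [0 if ((x - cx) / rx) ** 2 + ((y - cy) / ry) ** 2 <= 1 else 255
         for x in range(width)]
        for y in range(height)
    ]
```

To use it, convert the list to a NumPy array and pass it in, e.g. `WordCloud(mask=np.array(ellipse_mask(400, 1200), dtype=np.uint8), background_color='white', stopwords=stopwords)`. According to the wordcloud documentation, when a mask is given its shape replaces the `width` and `height` arguments and white (255) entries are treated as masked out; the `contour_width` and `contour_color` parameters can then draw an outline around the shape.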

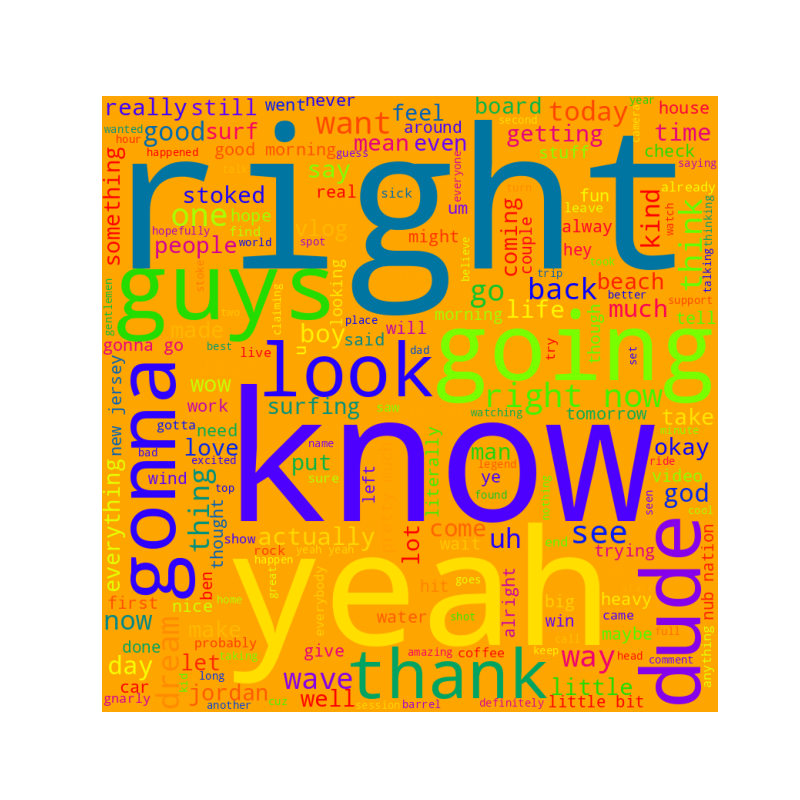

Later versions with and without masking

In the end, I think the masking made the text too hard to read, so I’m going with the colourful poster above as my final version. Thanks for the videos, Ben!